In a previous article (“Making the best of SkillGym Analytics”), we explored how powerful SkillGym analytics can be at turning conversations into metrics.

We saw that there are several ways to measure conversational performance, from the broadest metrics, such as “confidence” and “self-awareness,” down to the most detailed competency-model KPIs.

However, one of the main challenges when practicing is pinpointing the exact connection between what was said during the conversation and the resulting competency scores.

This is where Augmented Replay comes in. Let’s see how this tool can help you quickly improve your confidence, self-awareness and overall performance by analyzing your past conversations.

Enter Augmented Replay

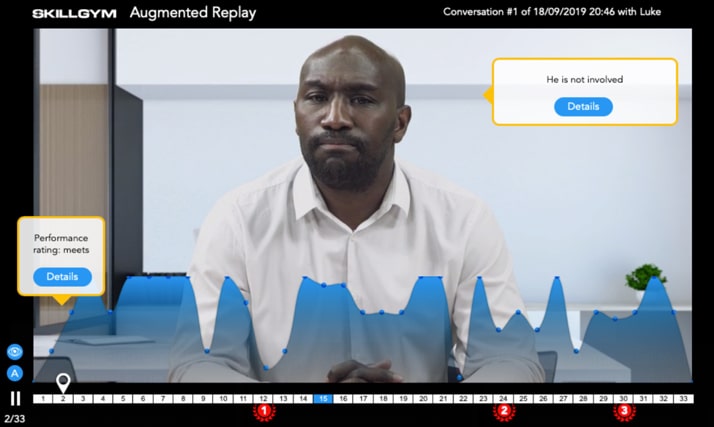

SkillGym’s Augmented Replay lets you review the entire conversation after it has been played. The idea is to watch and listen to the action from an outside observer’s position, so you can review your performance and reflect on the details.

The two main aspects that users often want to review are:

- Their own behaviors throughout the conversation. Imagine being able to review each sentence, identify the predominant behaviors behind it and rate how well they were applied (along with suggestions on other ways you could have handled that specific step of the conversation).

- The character’s body language. Recognizing other people’s body language is one of the main challenges for almost everyone. The most advanced Digital Role Play platforms support this feature.

Since this article focuses on the former, let’s start with the most intuitive, yet most powerful, feature of Augmented Replay: the ability to start and stop the action and move through the conversation to find the specific steps you want to review.

It may seem trivial, but before Augmented Replay, the user experiences the conversation in real time, with no way to step back. This makes good sense: while practicing, we want users to feel the authenticity of real life.

But once the real-time practice is completed, having the option to browse through the conversation is essential to be able to reflect, discuss and learn.

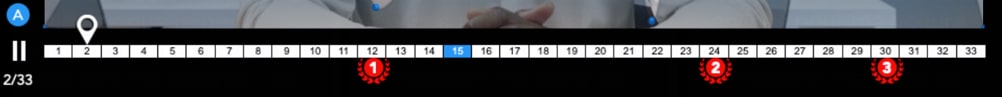

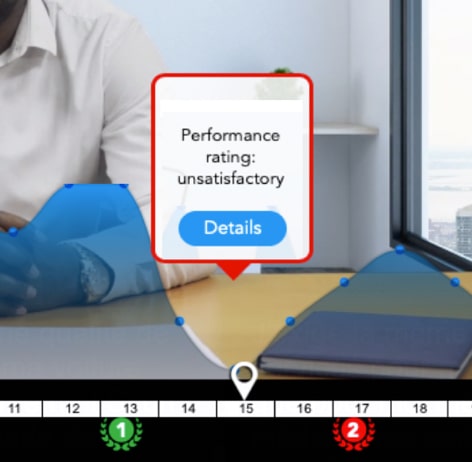

You navigate using the bottom bar, where each step of the conversation is shown as a rectangle and the play/pause button sits at the far left.

Which steps to focus on depends on the scope of your analysis. You may want to look at the steps:

- Where you performed very badly, to learn what happened and find a way to improve

- Where you performed very well, to double down on those best practices

- Where your behavior impacted the competencies you most need to improve on

In all of these cases, the blue curve just above the bottom bar gives you a good indication of where to look when searching for the right step to analyze.

Once you have found the step, you can:

- Open the detailed view showing which behavior was behind the sentence you chose at that step

- Open the detailed view showing the body language of the character while listening to your sentence

- Open the detailed view showing the character’s answer and summarizing the entire step in terms of your behavior, the character’s answer and the impact of the step on the overall trend of the conversation

From competency to conversation and back

I opened this article by focusing on how trainees can connect the dots between a competency model and their daily behaviors.

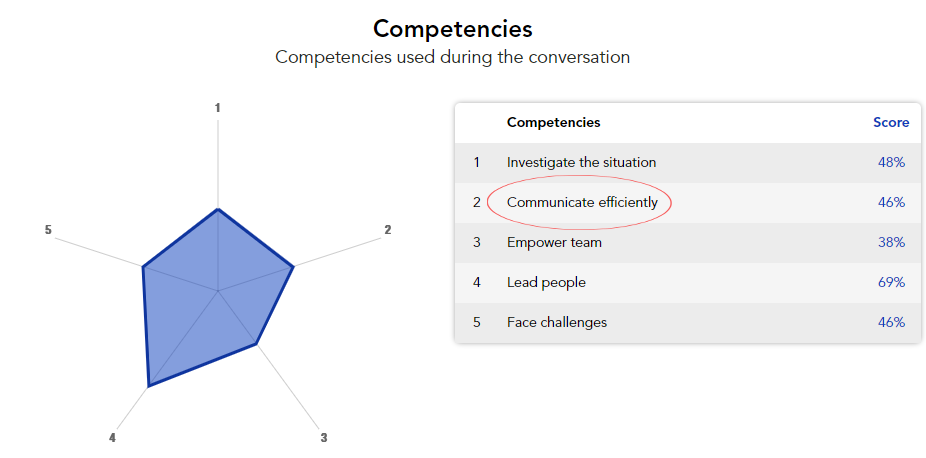

So let’s look at a few passages in order to learn about this point from SkillGym. Imagine that your competency map looks like this:

You will certainly want to learn why you scored low on, for example, the competency called “Communicate efficiently.”

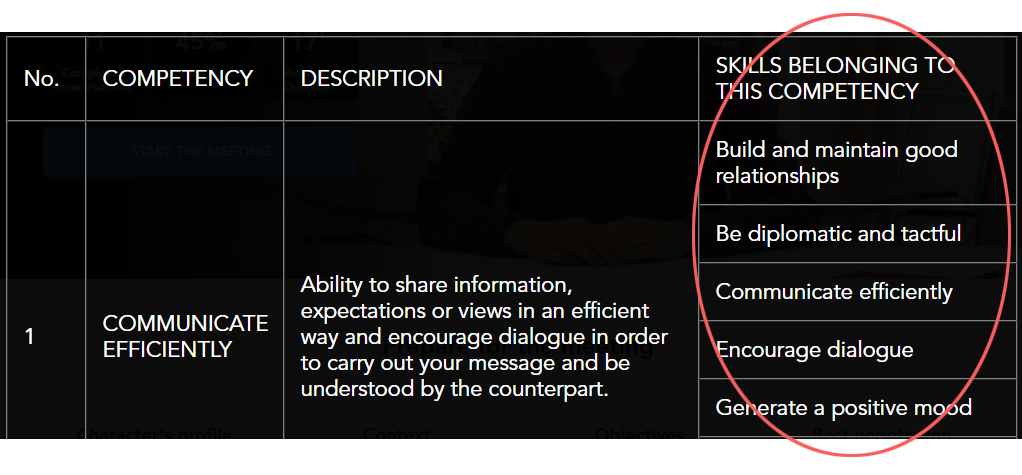

The first step is to check which behaviors are connected to that specific competency. You can find this in the SkillGym Launcher.

The next step is to open the Augmented Replay and look at the steps where those behaviors were associated with your sentences.

In our example, at step 15:

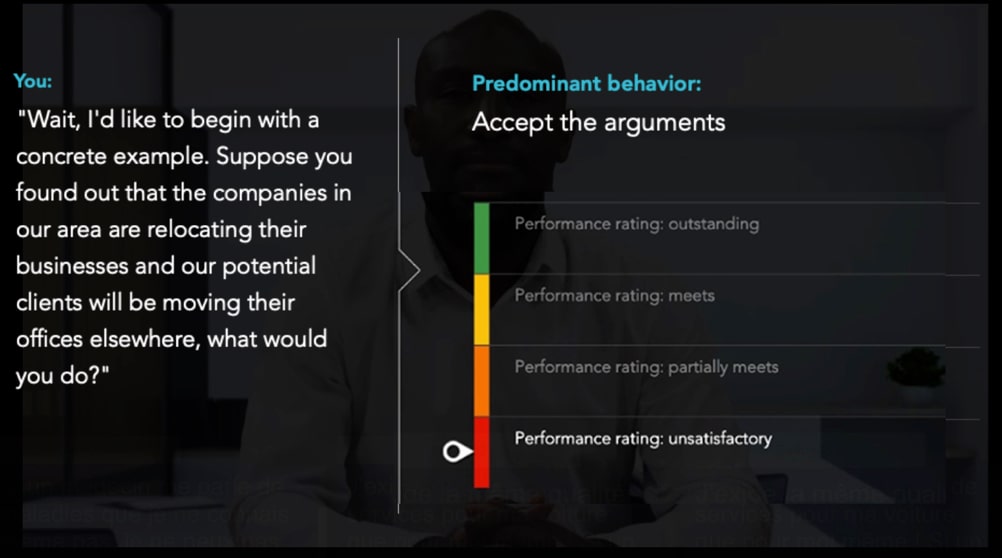

Here, your sentence scored poorly on the behavior called “Accept arguments.”

So the next thing to do is open the detailed view to find out which of your sentences underperformed on that behavior:

At this point, you have a clear link between the score of a behavior tied to a specific competency and the actual choice you made in the simulation.

You can also dig deeper from here, for example by:

- Looking at the character’s body language while they listen to that sentence, or

- Analyzing the impact of your behavior on the character’s next reaction

All of these cues help you turn the abstract descriptions of a competency model into real, tangible daily actions, such as choosing to talk with your employees in one way rather than another.

Of course, several behaviors usually affect a single competency, and they can show up in more than one step of the conversation.

For this reason, I recommend focusing on one competency at a time and reviewing every interaction in that conversation that affected it.

Using Augmented Replay this way is a great way to build self-awareness and to understand how closely your daily behaviors match your competency model.

We would be delighted to show you SkillGym’s solution in a 1-hour discovery call.